A lot has happened in my personal life since my last post, which explains the dormancy of my site, but I’m now starting to get back in the saddle and work on some interesting stuff.

Storytime

Once I had settled into my new apartment after my stint at edX, I started mulling over new project ideas. I wanted to apply some of the practices I learned at edX and keep building my career as a software developer. What were some of the goals?

- Learn GitHub Actions (after being exposed to Travis CI)

- Build a CI/CD pipeline and figure out how to automate testing/deployment (after being exposed to GoCD and ArgoCD)

- Have some static site to show for it (like utilizing Hugo to publish something to Netlify)

After conversations with friends and old coworkers, I decided to do some simple analysis of bike-sharing data from the Covid-19 period and push some visuals to a static site. Who doesn’t like infographics and weird stats?

Also, for me, this project is a good opportunity to talk about a lot of software development sub-topics and what I’m learning along the way, such as the value of testing and linting code, how to do both, and the challenges that are making me pivot or adjust.

A little bit about the project

What problem(s) does it aim to solve?

In conversations with the old coworker who gave me the idea, one challenge came up: when a data set has a more or less unpredictable endpoint, acquiring the data is a pretty manual endeavor.

- Check this S3 bucket to see if new data has been published.

- Click the link to get the zip file.

- Unpack it. Add it to your data set. Do a new analysis.

So why not automate that? Hence the plan: run a script that does all of that through a GitHub Action, and then publish something interesting.
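The manual steps above can be sketched in Python using only the standard library. This is a minimal sketch under a few assumptions, not the script from my repository: it assumes the bucket exposes a public XML listing in the standard S3 format, and the function names (`fetch`, `list_zip_keys`, `new_keys`, `extract_csvs`) are hypothetical names I made up for illustration.

```python
import io
import urllib.request
import xml.etree.ElementTree as ET
import zipfile
from pathlib import Path

# XML namespace used by public S3 bucket listings.
S3_NS = "{http://s3.amazonaws.com/doc/2006-03-01/}"


def fetch(url):
    """Download a URL and return its raw bytes."""
    with urllib.request.urlopen(url) as response:
        return response.read()


def list_zip_keys(listing_xml):
    """Return the sorted keys of all .zip objects named in a bucket listing."""
    root = ET.fromstring(listing_xml)
    keys = [el.text for el in root.iter(S3_NS + "Key") if el.text]
    return sorted(key for key in keys if key.endswith(".zip"))


def new_keys(available, already_downloaded):
    """Return the keys we have not downloaded yet, preserving order."""
    seen = set(already_downloaded)
    return [key for key in available if key not in seen]


def extract_csvs(zip_bytes, dest):
    """Unpack every CSV in an in-memory zip into dest; return the new paths."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    extracted = []
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            if name.lower().endswith(".csv"):
                extracted.append(Path(archive.extract(name, dest)))
    return extracted
```

A scheduled job could call `fetch` on the bucket URL, pass the result to `list_zip_keys`, compare against a record of what has already been pulled with `new_keys`, and then `fetch` and `extract_csvs` each new archive. Keeping the network call separate from the parsing and unpacking makes the rest easy to test without touching the bucket.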

Progress so far and some cool things

You can check out the repository here to see what I’ve been up to. Notable accomplishments so far include pulling the data for the Covid-19 period and running tests on code pushed to the repository, regardless of branch. Let’s briefly talk about testing and the GitHub Action associated with it.

Testing with GitHub Actions

You’ve probably done it all before. To check whether a given function works, you probably did some manual testing. Say, for instance, you had a function that added two numbers. You wanted a sum of 5, so you might have gone into a Ruby or Python console, deliberately fed it the numbers 2 and 3, and got what you wanted.

For the next step up, let’s say you wrote a script that ran a set of tests using something like RSpec (for Ruby) or unittest or pytest (for Python). You’d still have to manually execute the script every time you updated your code or your tests.

GitHub Actions takes that manual process and automates it, and it’s definitely not limited to just testing.
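To make that concrete, here is a minimal sketch of the addition example written as a unittest test case. The function `add` and the class `TestAdd` are hypothetical names for illustration, and in a real project the function would live in its own module rather than next to its tests.

```python
import unittest


def add(a, b):
    """Return the sum of two numbers."""
    return a + b


class TestAdd(unittest.TestCase):
    """The console check from above, written down so it can be re-run."""

    def test_two_plus_three_is_five(self):
        self.assertEqual(add(2, 3), 5)

    def test_negative_numbers(self):
        self.assertEqual(add(-2, -3), -5)
```

If this were saved as a file whose name starts with `test`, running `python -m unittest` from the same folder would discover and run both tests. That command is the manual step that still has to be repeated after every change.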

To set it up, you can do the following.

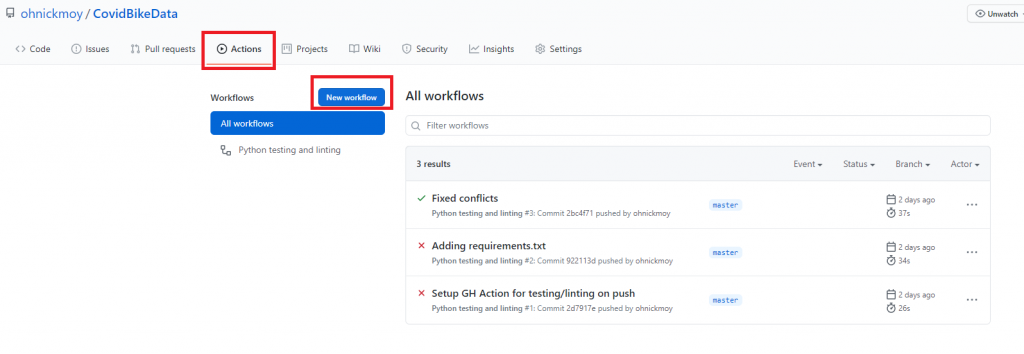

1. When you’re in your repo, click on “Actions” and then on “New workflow”.

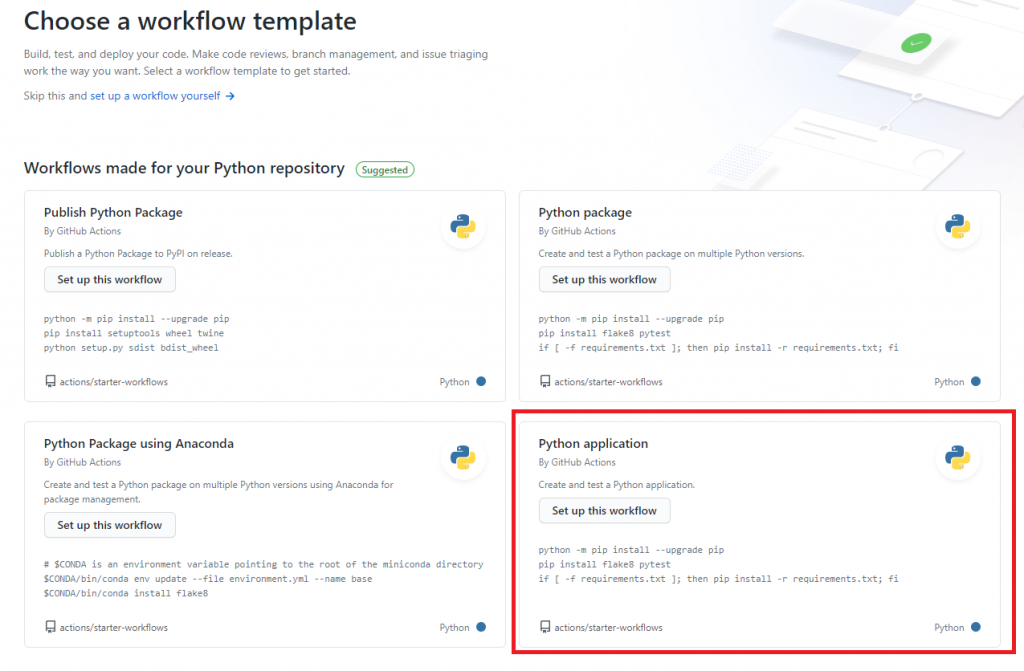

2. For the purposes of testing (and assuming you’re using Python), click on Python application.

Actions are pretty customizable to your liking, but the pre-set ones are good for those who are just diving into this stuff.

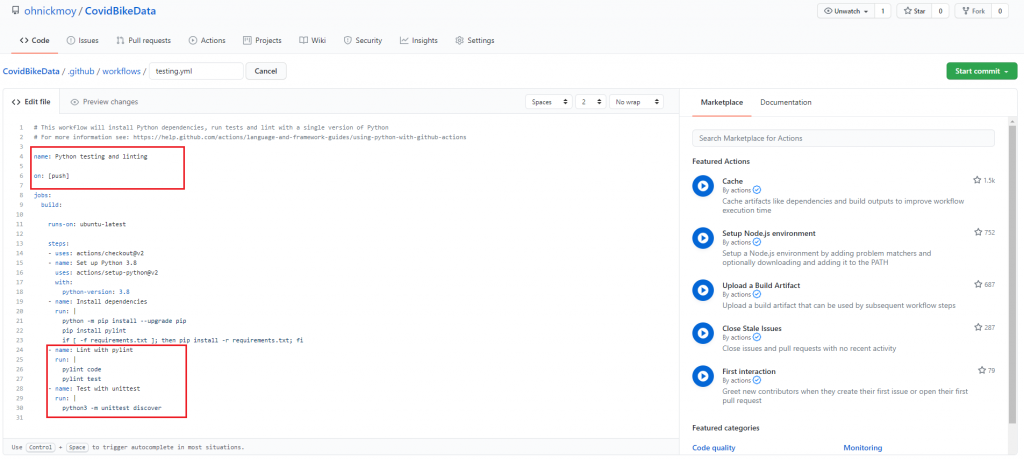

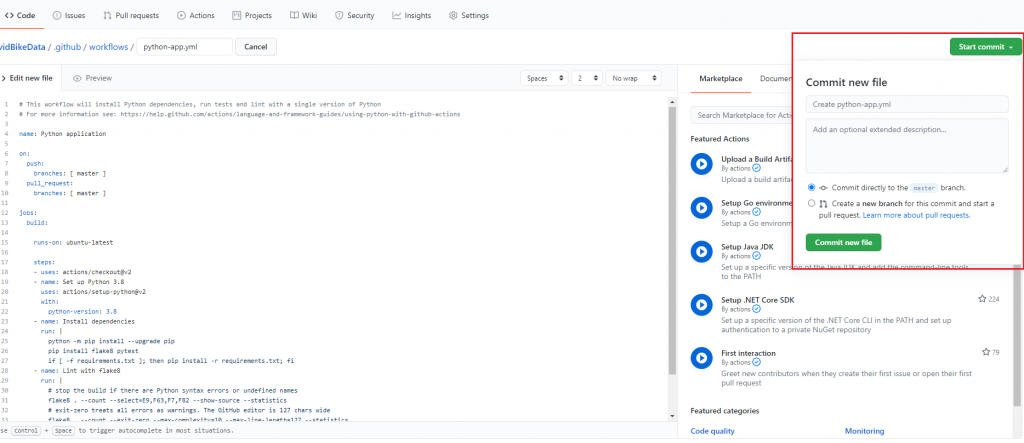

3. Now you’re given a chance to write your workflow. This requires some knowledge of how YAML files are written and a good understanding of GitHub and its actions, so read the docs! What I ultimately ended up with is below. I outlined my changes in red.

The prebuilt workflow ran tests on pushes to master only, but I opted to run them on pushes to all branches. In addition, I opted to lint with pylint instead of flake8 (just a personal preference) and test with unittest (again, personal preference).

4. Next, click on the button that says “Start Commit”, give it a commit message and a description if you want, select one of the two options, and then commit the new file.
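In text form, a workflow with those changes could look roughly like the sketch below. Treat it as an illustration rather than a copy of my file: the action versions, the Python version, and the exact lint and test commands are assumptions, and the pre-set template may differ from what GitHub offers today.

```yaml
name: Python application

# Run on pushes to every branch instead of master only.
on:
  push:
    branches:
      - '**'

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: '3.x'
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install pylint
          if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
      # Lint with pylint instead of the template's flake8 step.
      - name: Lint with pylint
        run: pylint $(git ls-files '*.py')
      # Test with unittest's built-in discovery.
      - name: Test with unittest
        run: python -m unittest discover
```

If any step exits with a non-zero status, the job is marked as failed, which is what ends up driving the pass or fail mark on a commit.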

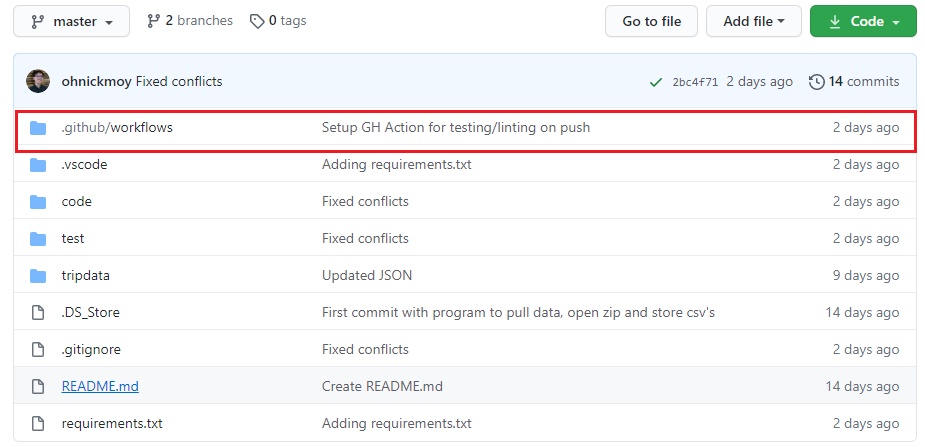

What results is a new folder in the repo that goes by the name of .github/workflows, assuming you didn’t change anything besides the filename.

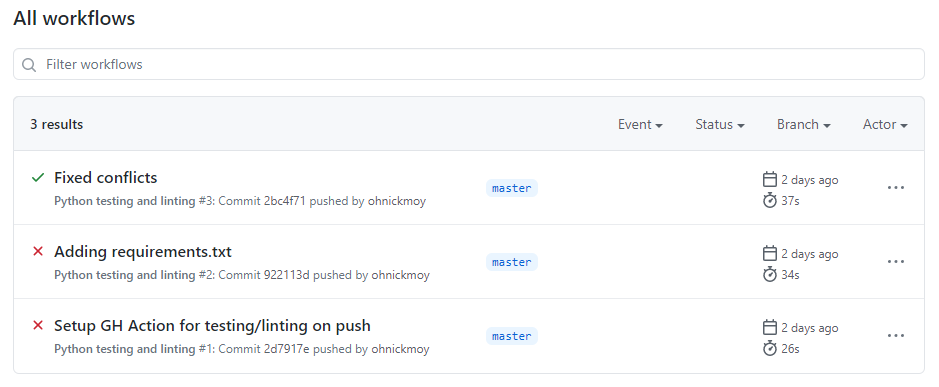

Because of the way the workflow YAML is configured, it’ll now fire every time a commit is pushed to any branch. You can see which actions were executed the next time you click on the “Actions” tab on the repo page. For example:

You might notice a green checkmark or a red x on your commits, depending on whether your tests pass or fail.

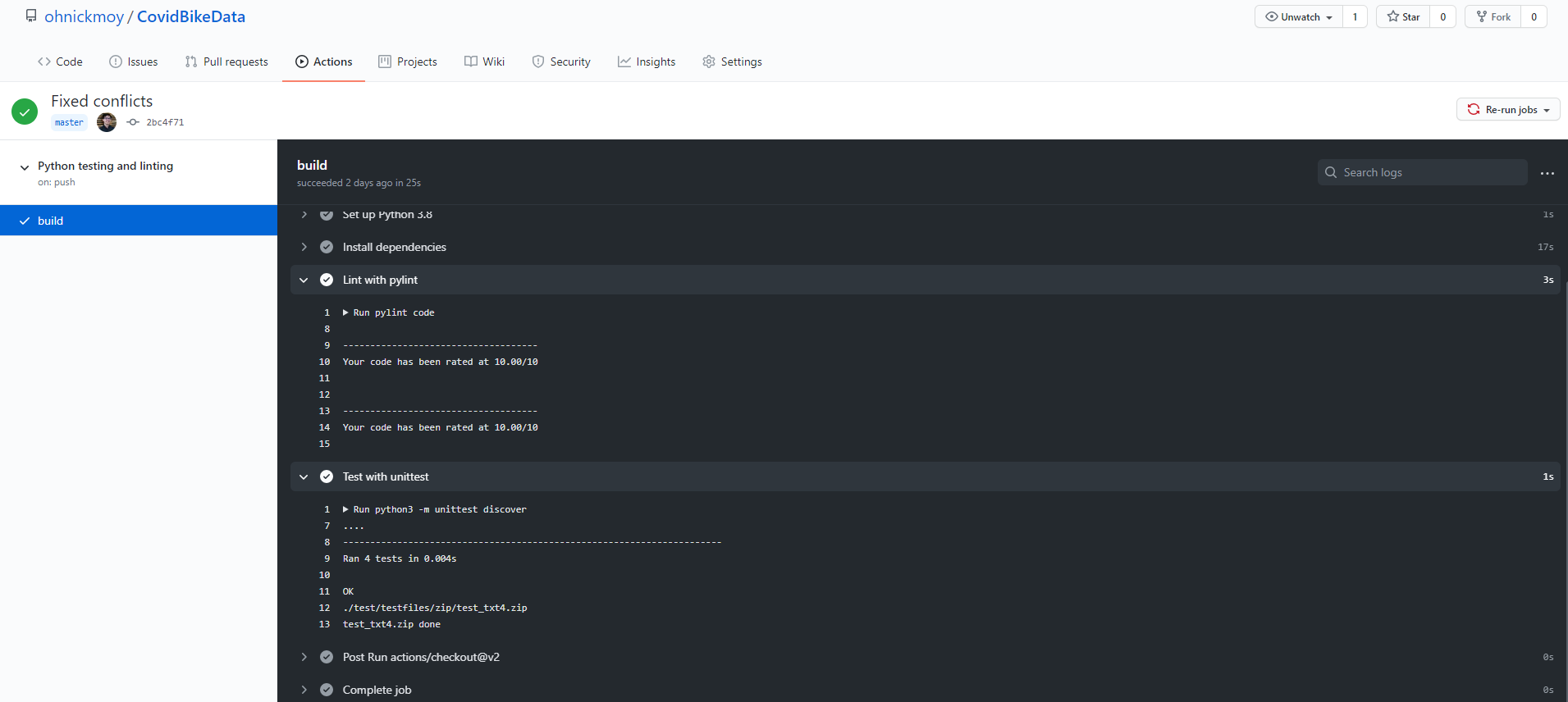

If you’re curious about the details, you can click on the commit message, like “Fixed conflicts” for example, and it’ll take you to the job details. Then you can check the build status for details. For instance…

This shows that the committed code passed the checks run by the action.

And there you have it! A brief primer on GitHub Actions.

Potential hurdles and looking into the future

Hurdles

Looking at the data already, I noticed something. While the zip files containing the CSVs were around 100 MB or under, the CSVs themselves are around 200-400 MB apiece, meaning data storage, retrieval, and analysis will get hefty pretty quickly. That takes me to the first hurdle I’m trying to address: storage and cost.

As most of you probably know, nothing (or very little) comes free, so storing these large files on cloud servers that belong to Amazon or Google and retrieving them on an automated schedule every month could get costly. There are some workarounds that I’m mulling over, such as:
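One way to see that gap without unpacking anything is to read the sizes recorded inside the zip itself. The sketch below uses the standard `zipfile` module; the helper names `size_report` and `total_mb` are hypothetical and only here for illustration.

```python
import zipfile


def size_report(zip_source):
    """Return (name, compressed_bytes, uncompressed_bytes) for each member.

    zip_source can be a path or a file-like object.
    """
    with zipfile.ZipFile(zip_source) as archive:
        return [
            (info.filename, info.compress_size, info.file_size)
            for info in archive.infolist()
        ]


def total_mb(report):
    """Return total (compressed, uncompressed) size in megabytes."""
    compressed = sum(c for _, c, _ in report)
    uncompressed = sum(u for _, _, u in report)
    return compressed / 1e6, uncompressed / 1e6
```

Running `total_mb(size_report(path))` on each downloaded archive gives a quick estimate of how much space the unpacked CSVs would need before committing to a storage option.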

- Create AWS account with free tier and store it that way

- Store 2-3 months’ worth with Git LFS

- Store data with https://github.com/actions/upload-artifact

I haven’t decided yet, but it’s something I’ve been thinking about since it occurred to me how expensive this can get for an unemployed guy.

Things to look forward to

Among the things I’m looking forward to are the data visualization and publishing it to the site, which builds toward creating the CI/CD pipeline that I mentioned earlier.

And there you have it! I’m back to blogging, and I hope you’ll follow me on this journey of working on some new stuff.

Peace!